Hyper Familiar

Hyper Familiar is a site-specific augmented reality (AR) experience that amplifies people’s sensory perception, encouraging them to step outside their usual patterns of perception and explore the ‘essence’ of a site. The project is situated in the public garden of St Dunstan in the East in the City of London, a historic site whose deep-seated architectural tracery reveals and narrates its past. Using the full audio-visual potential of augmented reality, the design adds an amplified sensory architectural layer that reframes and defamiliarizes the historic urban environment.

The cybernetician Gordon Pask says, “Man is prone to seek novelty in his environment and having found a novel situation, to learn how to control it.” Hyper Familiar offers enough variety to provide a potentially controllable novelty: it conveys the sensation of environments as they are defamiliarized through an interactive, digitally augmented language. It impels people to explore, discover, and explain their surroundings between the real and the abstract, forming a new, ‘hyper’ way to appreciate all the living relationships of experience.

Our primary design methodology is defamiliarization, an artistic technique and a central concept of Russian Formalism that encourages people to see everyday things as strange, wild, and unfamiliar. Its purpose is to impart the sensation of things as they are perceived and not as they are known.

We chose the garden of St Dunstan in the East (London) as the experiment site for Hyper Familiar because it is a multi-layered historic site with a palpable spirit of place. It was once a church; after being damaged several times, it is now used as a public garden but still preserves many traces of its past. Grinling Gibbons once made wood carvings for the old church. Today we are trying to add some digital ‘filigree’ to it.

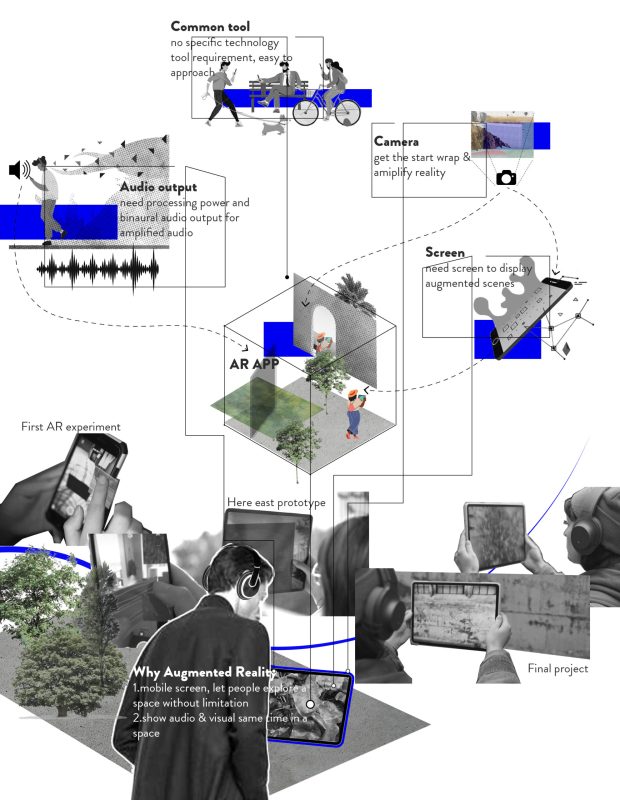

AR seems to be the perfect, and perhaps the only, way to implement our concept, because it can amplify both audio and visuals simultaneously in space. It is also accessible to almost everyone: with a mobile device’s camera, we can wrap and amplify reality with a minimal gap between the real and the augmented.

The effects are driven by two parameters: the distance and the angle between the iPad’s camera and the anchors, which are placed according to their relative positions in the site. Both values are recalculated continuously as the frames refresh. When they fall within a specific range, the visual effects, which are generated by manipulating the camera image, start and binaural sound plays.
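The trigger logic described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the project's actual implementation: the function names, the vector representation, and the threshold values (`max_distance`, `max_angle_deg`) are all assumptions made for the example.

```python
import math


def distance_and_angle(camera_pos, camera_forward, anchor_pos):
    """Return (distance, angle in degrees) between the camera and an anchor.

    All arguments are 3D vectors given as (x, y, z) tuples. The angle is
    measured between the camera's viewing direction and the direction
    from the camera to the anchor.
    """
    offset = [a - c for a, c in zip(anchor_pos, camera_pos)]
    distance = math.sqrt(sum(d * d for d in offset))
    forward_len = math.sqrt(sum(f * f for f in camera_forward))
    if distance == 0.0 or forward_len == 0.0:
        return distance, 0.0
    cos_angle = sum(o * f for o, f in zip(offset, camera_forward))
    cos_angle /= distance * forward_len
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return distance, math.degrees(math.acos(cos_angle))


def effect_active(distance, angle_deg, max_distance=2.0, max_angle_deg=30.0):
    """Hypothetical trigger rule: the anchor is close and roughly in view."""
    return distance <= max_distance and angle_deg <= max_angle_deg


# Called once per frame with the current camera pose (illustrative values).
dist, angle = distance_and_angle((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), (0.0, 0.0, -1.5))
if effect_active(dist, angle):
    pass  # start the visual effect and the binaural sound here
```

In a real AR session the camera position and viewing direction would come from the tracking framework on every frame; here they are passed in as plain tuples to keep the sketch self-contained.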

We chose several textures in the site with strong, clearly contrasting qualities as design objects, so that different feelings play off one another. The location of each effect was also considered in order to arrange the overall sensory map. In this way, visitors can enjoy a unique narrative sensory experience that they lead themselves.

The visual effects are designed around the texture of each object and its relation to the site. Whether it is a decayed wall worn away by time or rustling leaves associated with natural vitality, each effect tries to amplify people’s sensory perception of ordinary things.

The sound is likewise designed from original material that we recorded beforehand with a Zoom H5 recorder. Granular synthesis is our primary method: the sound is split into small pieces called grains. Multiple grains can be layered on top of each other and played at different speeds, phases, volumes, and frequencies, among other parameters, to produce something new.
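As a rough illustration of granular synthesis, the Python sketch below cuts short windowed grains from a source signal and layers them at random positions with varying speed and gain. It is a minimal sketch under stated assumptions, not the sound design used in the project: the function names, grain length, grain count, speed choices, and gain range are all hypothetical, and the source is a plain list of float samples rather than a recorded file.

```python
import math
import random


def make_grain(source, start, length, speed=1.0):
    """Cut one grain from `source`, played back at `speed`, with a Hann window."""
    out_len = max(1, int(length / speed))
    grain = []
    for i in range(out_len):
        src_index = start + int(i * speed)  # nearest-sample resampling
        if src_index >= len(source):
            break
        # The Hann window fades the grain in and out to avoid clicks.
        window = 0.5 * (1.0 - math.cos(2.0 * math.pi * i / max(1, out_len - 1)))
        grain.append(source[src_index] * window)
    return grain


def granulate(source, out_length, grain_length=441, grain_count=200, seed=0):
    """Layer randomly chosen grains into an output buffer of `out_length` samples."""
    rng = random.Random(seed)
    output = [0.0] * out_length
    for _ in range(grain_count):
        start = rng.randrange(0, max(1, len(source) - grain_length))
        speed = rng.choice([0.5, 1.0, 2.0])  # half speed, original, double speed
        gain = rng.uniform(0.2, 1.0)
        grain = make_grain(source, start, grain_length, speed)
        offset = rng.randrange(0, out_length)
        for i, sample in enumerate(grain):
            if offset + i >= out_length:
                break
            output[offset + i] += gain * sample  # overlapping grains sum together
    return output


# Example: granulate 0.1 s of a 440 Hz sine tone sampled at 44.1 kHz.
tone = [math.sin(2.0 * math.pi * 440.0 * n / 44100.0) for n in range(4410)]
texture = granulate(tone, out_length=8820)
```

Because overlapping grains are summed, the output can exceed the original amplitude range, so a real signal chain would normalize or limit the result before playback.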

References

Merleau-Ponty M. Phenomenology of perception[M]. Motilal Banarsidass Publishers, 1996.

Crawford L. Viktor Shklovskij: Différance in Defamiliarization[J]. Comparative Literature, 1984: 209-219.

Pask G. A comment, a case history and a plan[J]. Cybernetics, art and ideas, 1971: 76-99.

Kenderdine S. Embodiment, entanglement, and immersion in digital cultural heritage[J]. A new companion to digital humanities, 2015: 22-41.